As HFS reflects on last week’s AWS re:Invent event, it becomes increasingly clear that AWS could lose steam as the migration to the cloud, as a technology platform, gives way to the cloud’s role in making data a business asset.

While AWS’ growth numbers are still holding up, there could be significant changes on the horizon as Google Cloud Platform (GCP) makes up lost ground with its approach to a data-centric cloud environment. In addition, cool kids on the block like Snowflake and Databricks are changing the narrative from commodity cloud to data-driven cloud.

The fact that AWS forced HFS to digest these proceedings online speaks volumes for AWS’ preference to have only analysts in-person who will sing their praises, repeat their rhetoric, and refrain from challenging them. Why risk analysts who dare to talk to enterprises and question the logic of where (and why) they are spending vast amounts of their money with a half-baked cloud vision?

If you move your existing crap into the cloud, you’ll end up with even worse crap… and less than 50% of cloud native transformations are currently successful

AWS continues to cash in by bolstering its commodity cloud offerings and pouring funds into a morass of new products. The result is significant complexity for enterprise customers navigating this portfolio, which piles pressure on them to seek out increased support from partners with both domain and data experience.

Our research shows that migrating to the cloud is costing enterprises many billions a year, and that cost continues to rise as many enterprises move too fast and fail to fix their underlying data infrastructures. Throw in the massive wage hikes, attrition and skills shortages in the tech space, and the cost of migrating your critical data into the cloud becomes unconscionable, especially at a time when most CFOs are freezing spending in anticipation of a very challenging economic period.

Net-net, you can’t migrate processes and workflows that don’t get you the data you need until you’ve fixed them first. If you move your existing crap into the cloud, you end up with even worse crap, and you just wasted a lot of time and money in the bargain. And you don’t even need to survey what enterprise buyers are spending – you just need to examine the huge growth numbers enjoyed by the majority of consultants and IT services providers in recent years, cashing in on rushed and poorly prepared cloud migrations.

The move away from “all in on hyperscalers” is more of a threat to AWS’s bottom line than to either of its notable competitors, Azure or Google Cloud. Hosting data, facilitating compute, and managing web storage are now commodities whose cost-to-ROI is being questioned (although nicely), with the conversation shifting from the “move to the cloud” to the “move to hybrid.”

As noted in HFS’s recent Cloud Native Transformation Horizons study, “The buy side is struggling to capture the value of their cloud investments, as very few enterprise customers have a well-defined cloud transformation strategy at an organizational level, which can lead to transformations done in silos.” The results are showing that less than 50% of cloud native transformations are a success…

HFS views Google Cloud Platform as a refreshing future for enterprises considering their cloud migration more strategically to address data in the domain context

The “Cloud” is quickly becoming a commodity platform. Adopting a cloud-native mindset is about leveraging multi-cloud and hybrid cloud solutions to deliver business outcomes and empower employees, customers, and partners, all while managing costs. While AWS is the current leader in the hyperscaler market, it’s clearly feeling the pressure as GCP closes the gap.

HFS has repeatedly been documenting the importance of data. From “forget apps, it’s about the data” to a view on “data is your strategy,” and on modernization, there are many articles where we’ve done deep dives into the importance of data, automation, analysis, visualization, implementation, governance, and partnering to deliver results. We firmly believe that data is crucial to building, maintaining, and growing a robust, resilient ecosystem.

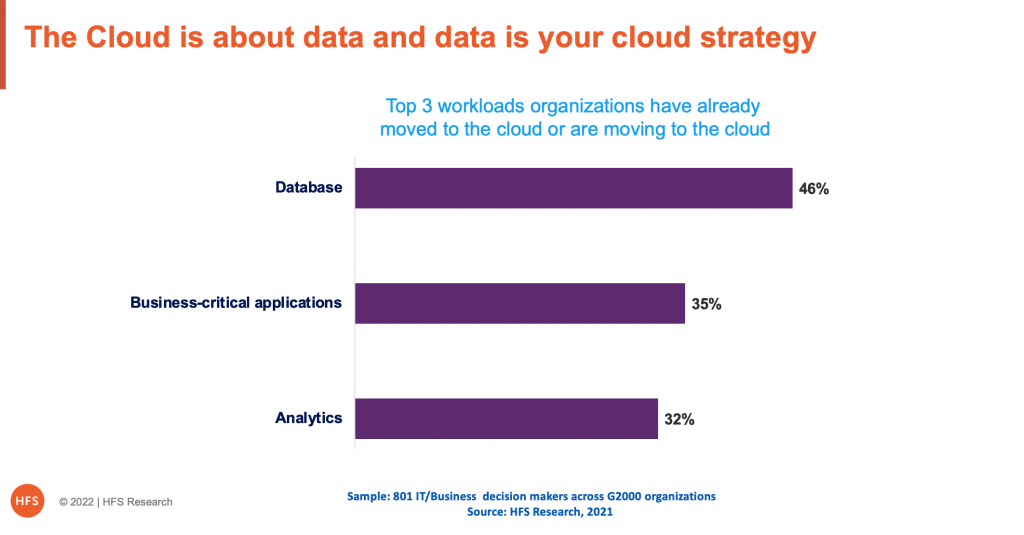

In fact, we flagged this in our HFS Pulse data from early 2021, where Global 2000 firms cited databases as the top workload being moved to the cloud:

The importance of data isn’t new… it’s your business strategy more than ever

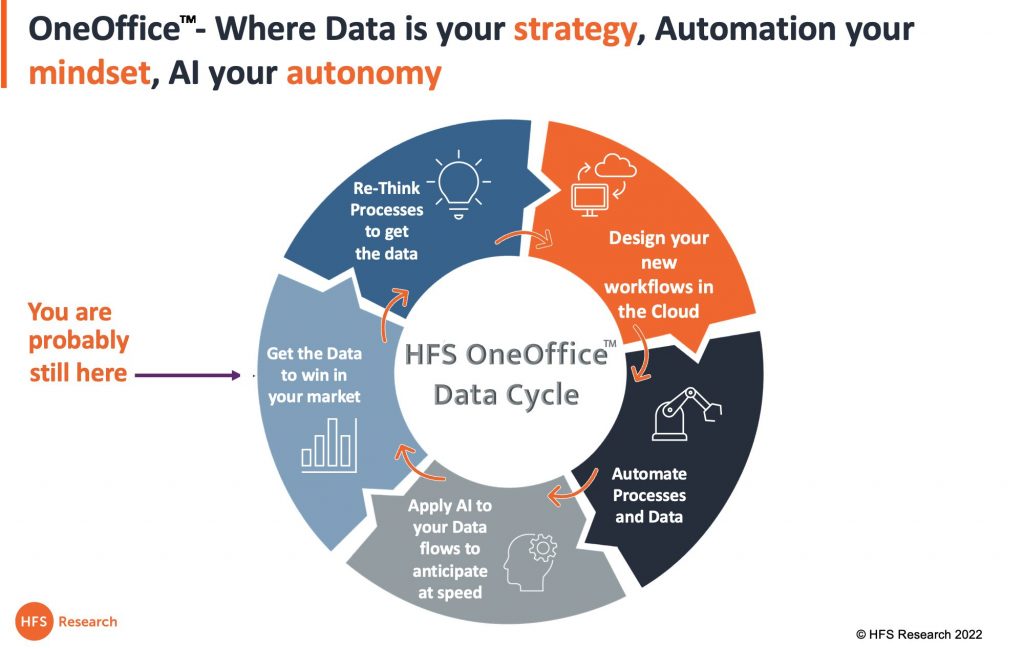

To be a truly autonomous organization that operates in the cloud, the principles of OneOffice hold truer than ever: workflows need to execute in real-time between customers and employees – and engage partners in your ecosystem. OneOffice is about understanding and discovering the data you must have to win in your market – right now in real-time – as the market environment keeps changing.

Google has been an advocate of data for years and tied this theme from their data center to Google Apps used by millions of firms. Yet, if a vendor (ahem, AWS) believes they can win with data, they really must improve how they tell their story if they want to expand their services and revenue opportunities further. And this is where we see GCP closing in fast on AWS – hence the attempt by AWS at re:Invent to re:Position itself as a data leader as well, since data is so critical to the data cycle that drives OneOffice.

Five steps you must take to get the data you need into the cloud

Moving data into the cloud has to be both a business transformation and a technical exercise. You can’t keep separating the worlds of business and IT any longer if you want your workflows to be executed autonomously in the cloud. Business executives must identify the data they need to be effective in making decisions and work with their IT counterparts to build a data structure that can be effectively migrated and operated in a cloud environment:

- Get data to win in your market. This is where you must align your data needs to deliver on business strategy. This is where you clarify your vision and purpose. Do you know what your customers’ needs are? Is your supply chain effective in sensing and responding to these needs? Can your cash flow support immediate critical investments? Do you have a handle on your staff attrition?

- Re-think processes to get this data. Then you must re-think what should be added, eliminated, or simplified across your workflows to source this critical data. Do your processes get you the data you need from your customers, employees, and partners?

- Design your new workflows to be executed in the cloud to deliver the data. There is simply no option but to have a plan to design processes in the cloud over three-tier web-architected applications. In the Work-from-Anywhere Economy, our global talent has to come together to create our borderless, completely digital business. This is the true environment for real digital transformation in action. This means you can’t migrate processes that don’t get you the data you need until you’ve fixed them first. If you move your existing crap into the cloud, you end up with even worse crap and have just wasted a lot of time and money in the bargain.

- Automate processes and data. Automation is not your strategy. It is the necessary discipline to ensure your processes provide the data – at speed – to achieve your business outcomes. Hence you have to approach all future automation in the cloud if you want your processes to run effectively end-to-end. This is where you need to ensure connectors, APIs, patches, screen scrapes, and RPA fixes not only support a digital workflow but will scale in the cloud. Many of these patched-up process chains become brittle and fall over in a scaling situation if the code is weak. We’ve seen billions of dollars wasted on botched cloud migrations in recent years because underlying data infrastructures were not addressed effectively, and bad processes became even less effective or completely dysfunctional.

- Apply AI to data flows to anticipate at speed. Once you have successfully automated processes in the cloud, it is easy to administer AI solutions to deliver at speed in self-improving feedback loops. This is where you apply digital assistants, computer vision, machine learning, augmented reality, and other techniques to refine the efficacy of your data. AI is how we engage with our data to refine ourselves as digital organizations where we only want a single office to operate with agility to do things faster, cheaper, and more streamlined than we ever thought possible. AI helps us predict and anticipate how to beat our competitors and delight our customers, reaching both outside and inside of our organizations to pull the data we need to make critical decisions at speed. In short, automation and AI go hand in hand… AI is what enables a well-automated set of processes to function autonomously with little need for constant human oversight and intervention.

Being a leader in data is more than having lots of storage, compute, and add-on SKUs

Connecting data workloads across multi-cloud and hybrid clouds, cloud data warehouses, data lakes, and applications is the world we live in. The orchestration of these is crucial and a focal point of projects from the Cloud Native Computing Foundation to Kubernetes.

While both AWS and Google are active in the CNCF and have solutions that support Kubernetes (K8s), AWS is the clunkier of the two. To be successful, data must flow across the internet, storage, and servers; thus, the configuration must be simple to implement and maintain. AWS’s growth is its own weakness here, as tooling for everything from managing IP domains to microservices, containers, and Kubernetes is being driven by Google’s efforts ahead of AWS.

The orchestration of data is a prime example. Amazon EKS (the AWS managed Kubernetes service) has been considered so poor that firms like Red Hat have come to customers’ rescue with Red Hat OpenShift Service on AWS (ROSA). AWS continues to improve EKS, but it is substantially behind GCP, and its customers are leaning on third parties to deliver these solutions.

Domain experience is key and AWS needs partners to deliver this – and they may be disintermediated as a result

AWS is the first-to-market leader. Our hats are off for the efforts they put into developing the hyperscaler market. But as the pioneer, much like every innovator, they now face the dilemma of how to stay in front while watching both Azure and GCP create more functional solutions for enterprise customers to build their business upon. As GCP continues to ramp up the number of certified engineers and experts in core cloud-native solutions like Kubernetes, AWS has found it critical to shore up partnerships to attempt to lock out the young Turk.

However, enabling partners to develop and improve on your technology and bring your products into larger domain and ecosystem projects opens the door to being disintermediated. A case in point that AWS is rushing to address is AWS Outposts. With the rise of hybrid cloud and the extension of public cloud, AWS is seeing customers retreat from its hyperscaler services to diversify and reduce costs. Integration firms are partnering with competing compute and data solutions to bring in stateless cloud solutions optimized for the customer domain, not the IT.

We see AWS as a very strong player when it comes to partnerships from a revenue perspective, but GCP is emerging when data is top of mind for enterprises. Partners are leveraging the AWS brand to boost revenues as the complexity of AWS is a challenge for even the most sophisticated organization. With the industry reaching this cloud-data pivot point, the door is wide open for these partners to increase their revenue streams by offering domain expertise, complex integration, and long-term support services. AWS’s own industry solutions lack real drawing power without these partners. And some partners, like IBM, bring tools such as Red Hat OpenShift Service on AWS (ROSA) that are sorely needed by customers to orchestrate hybrid and multi-cloud solutions.

Data holds the keys to cloud supremacy and AWS knows they are in for a real fight with Google

In Adam Selipsky’s keynote, he brings up the importance of data early (about 15 minutes in for those watching at home). Based on his keynote, “data is the center applications, processes, [and] business decisions. And is the cornerstone of almost every organization’s digital transformation.” He goes on to the need for tools, integration, governance, and visualization, much like what HFS has proposed (and reiterated recently): data as your strategic lever for everything you do.

In the keynote, Mr. Selipsky spent most of his time on AWS investments across the data modernization value chain of data storage, compute, load management, analytics, governance, and visualization. Several key products to manage, visualize, and forecast data were announced. While these are much needed to round out the AWS data story, they also add more SKUs, and customers will need help determining how these new solutions map to their ability to implement, train, and manage.

Organizations are being asked to put all their eggs in one basket to take advantage of AWS’s data story as painted by Adam and Swami Sivasubramanian (AWS VP Data & ML). And in many cases, while AWS has the first-mover advantage, Google has the power and tools of BigQuery, Cloud Dataflow, and Data Studio.

Key messages from Google Cloud Next that must have shaken AWS

At Google Cloud Next, Google announced strategic partnerships with Accenture and HCLTech. These partnerships build on how services firms can merge hyperscalers’ capabilities with the domain-centric intellectual property of services providers. Customers like Snap, T-Mobile, and Wayfair continue to put their trust in Google Cloud’s expertise in data analytics, artificial intelligence, and machine learning and are expanding their ongoing partnerships. Further, Rite Aid signed up with Google Cloud for a multi-year technology partnership that will help its customers with expanded and personalized access to the company’s pharmacists, an enhanced online experience, and intelligent decision-support systems, all powered via Google Cloud technologies.

Google is very clear on how data is core to its DNA, and the firm is bringing its knowledge and expertise to market with partners. In fact, many of the mergers and acquisitions by IBM, Accenture, Capgemini, and more are of firms with GCP practices. Data and multi-cloud, delivered through proven user applications to the masses, is clearly the future to democratize decision-making in ways that companies only dreamed of. Making data easy to find and action is bringing velocity to Google – and the likes of Databricks and Snowflake – that can’t be ignored.

We listened to AWS: what we liked and didn’t like

What fell flat (or at least was left in ambiguity):

- Cloud costs are important. In Adam Selipsky’s keynote, he made a show of AWS’s focus on customer, domain, and ESG values. However, he quickly moved to the defensive: less than 10 minutes in, he began arguing that the cloud is cheaper to run on than traditional data centers, citing company examples like Carrier, Gilead, and others and their savings. While these data points may be valid in aggregate, there is little detail or scope on how or what costs were saved – e.g., were they supporting or mission-critical workloads or solutions? The proof is in the data, and that really wasn’t on offer.

- Data is critical, and the lens to analyze hyperscalers is changing completely. AWS wants to be your data partner – but hasn’t really appreciated the complexity of migrating that data to the solutions. Hence the need to lean on the development of a more robust partner ecosystem.

- Millions of choices and crazy product fragmentation. AWS is becoming Baskin-Robbins, with more flavors and choices. Hey, it’s great to have a choice, but there comes a point where too much choice, too many overlapping features, and the need to carefully evaluate the pros and cons of each become more of a burden than a blessing. For instance, AWS offers 13 databases (eight purpose-built (proprietary) and five relational data engines), 12 analytics tools, and 19 AI/ML SKUs! AWS’s increasingly fragmented product catalog is creating challenges for customers and their partners to implement and support.

- AWS focused on technical outcomes, leaving business cases to customers and partners. Announcements ranging from Elastic Fabric Adapters to Graviton chips to Redshift product names made up a cluster of new choices that customers will need to be educated and trained on, and that will need to be validated. AWS focused on what customers must buy, not why it is building these.

- AWS’s data democratization story comes up short. Amazon DataZone was pitched as a solution for data users across an organization to deliver market insights. While creation, tagging and governance were strengths, the usability and collaboration components lacked the functionality of Google’s Looker, which offers broader integration with more industry solutions and visualization tools.

What we liked and feel AWS can continue to build on:

- AWS recognizes that data is the cornerstone. re:Invent has joined the “data is critical” bandwagon. AWS is getting much sharper on its messaging about how it aspires to be a trusted partner in building solutions that develop data as an asset in the cloud. With Amazon Aurora, they are taking the fight to Microsoft and Oracle, pushing an open-source SQL (PostgreSQL-based) solution that has the functionality with lower overhead and costs.

- AWS is improving the marketing of its portfolio to partners. AWS celebrated its software and services ecosystem. This is needed, given how complex the AWS product and marketplace have become. Customers need their trusted partners to help them sort through the AWS offerings to help them choose the right solution for their business.

- Data security got major props. AWS has realized that, to be a valid place for data, it needs robust security. It has offered some interesting solutions around AWS Security Lake with both its own and partner (Cisco, Snowflake, Palo Alto Networks, etc.) solutions. But how will these security solutions allow for multi-cloud solutions?

- AWS is becoming a marketplace for cloud solutions. As Siemens showed in their part of the keynote, they recognize AWS’s reach to resell their software solutions. By moving their code to AWS, they can drive new revenue streams and provide the market with industry-centric solutions.

- Supply chain tools and insights. AWS Supply Chain Insights is a very interesting solution for seeing how your ecosystem is working, bringing data from multiple companies into a single view so businesses can address inventory, supplier management, and customer outcomes.

The Bottom Line: Objects in the rear-view mirror may be closer than they appear. In AWS’s rear-view mirror is Google…

As AWS pivots its story from scale, storage, and cloud servers to data, the firm further validates Google’s relentless focus on data for the past several years.

AWS has ridden the cloud wave into this very dominant position in the market, but as we have seen, this race has many more cycles to run as we face a deep European recession and an uncertain US market as enterprises grapple with multiple headwinds. Cost is king, and the focus will be on data-driven value as opposed to mere commodity compute. AWS must avoid resting on its laurels if it’s going to keep the likes of Google from eating into its market share with its deep, deep resources and second-mover advantage.

The complexity and large number of choices the average user must now navigate to deploy, manage, and govern AWS investments are creating an opportunity for customers and the global IT Services market to reinvest in their relationships with Google to drive data, multi-cloud orchestration, and user application integration.

Honestly, there was lots to unpack at re:Invent. We, like millions, peered in for hours on the internet and saw some very cool innovations coming from AWS. However, once compared via a quick ‘google’ with those of Azure or GCP, they lost a fair bit of their innovative luster.

Look out AWS, GCP is coming for you. And it’s coming fast in this choppy, demanding, data-obsessed, and hyperconnected business environment.
