Month: June 2018

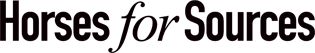

Concentrix gets up close and personal with Teleperformance with its Convergys acquisition

Just a couple of weeks after Teleperformance splashed $1 billion on Intelenet, we finally see Convergys gobbled up...

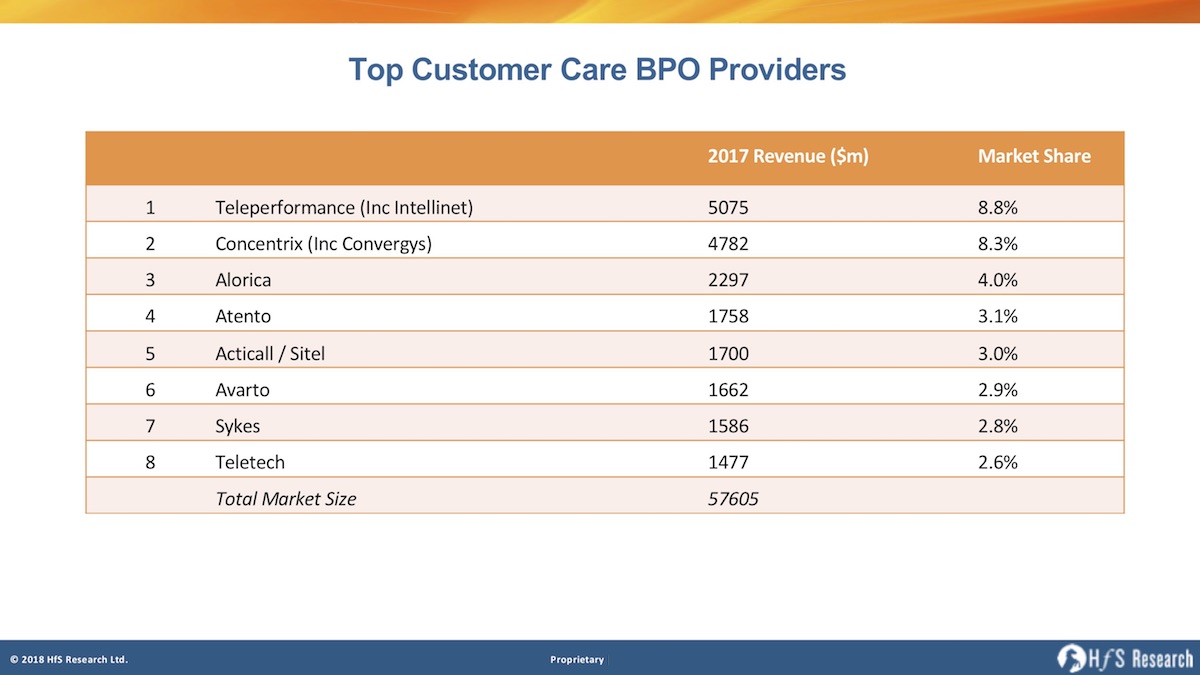

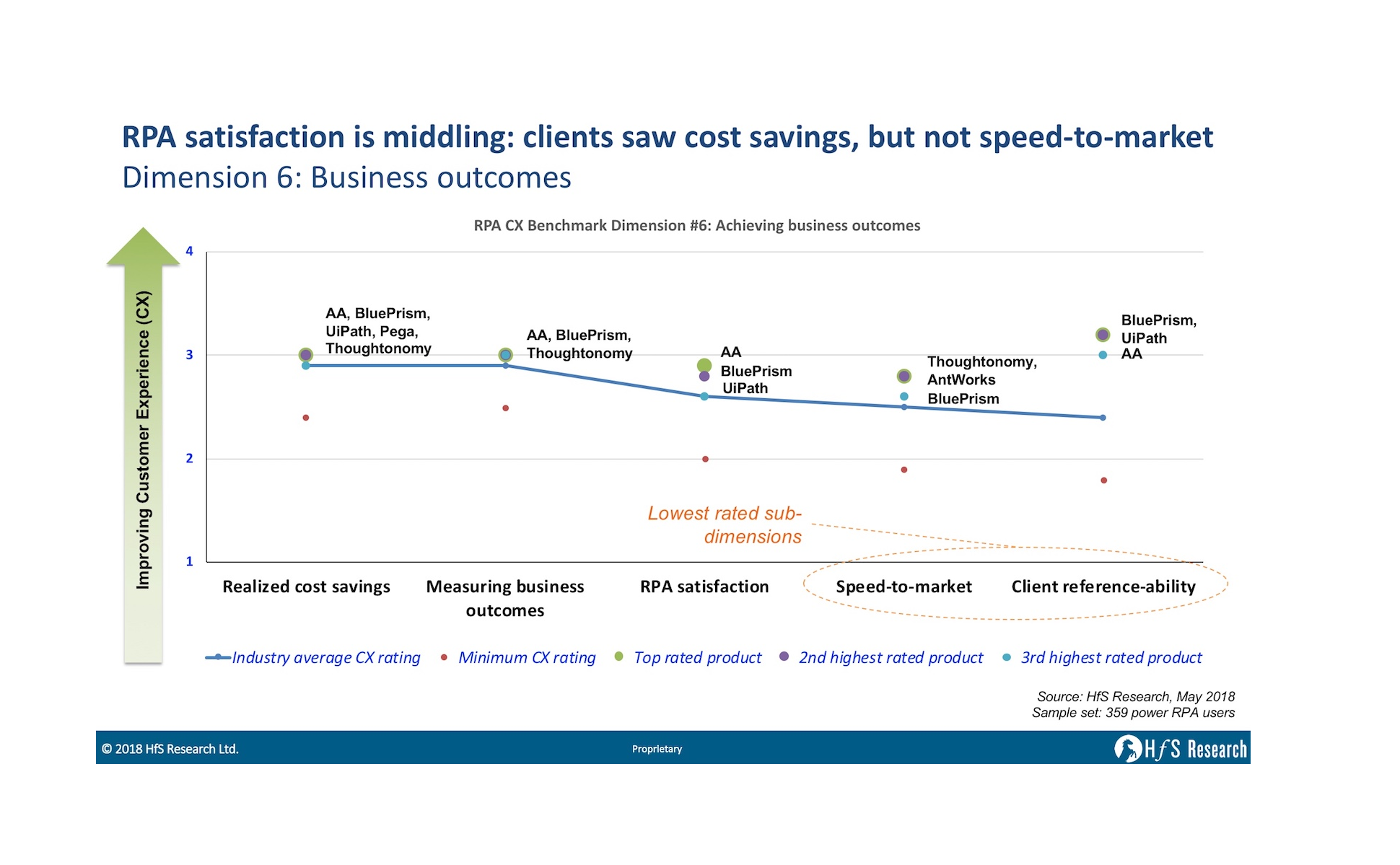

The definitive RPA product benchmarks: The overall picture across 359 superusers

HfS interviewed 359 super-users of RPA products (enterprises, advisors and service providers) on 40+ customer experience dimensions, grouped under the following 6 key dimensions...

You just can’t lose… with Chris Boos. Time for an AI reality check

Phil Fersht talks to arago Founder and CEO Hans-Christian Boos.

To keep receiving HfS updates, make sure you register now!

Keep up-to-date with the finest change-agent research on RPA, blockchain, AI... and much more.

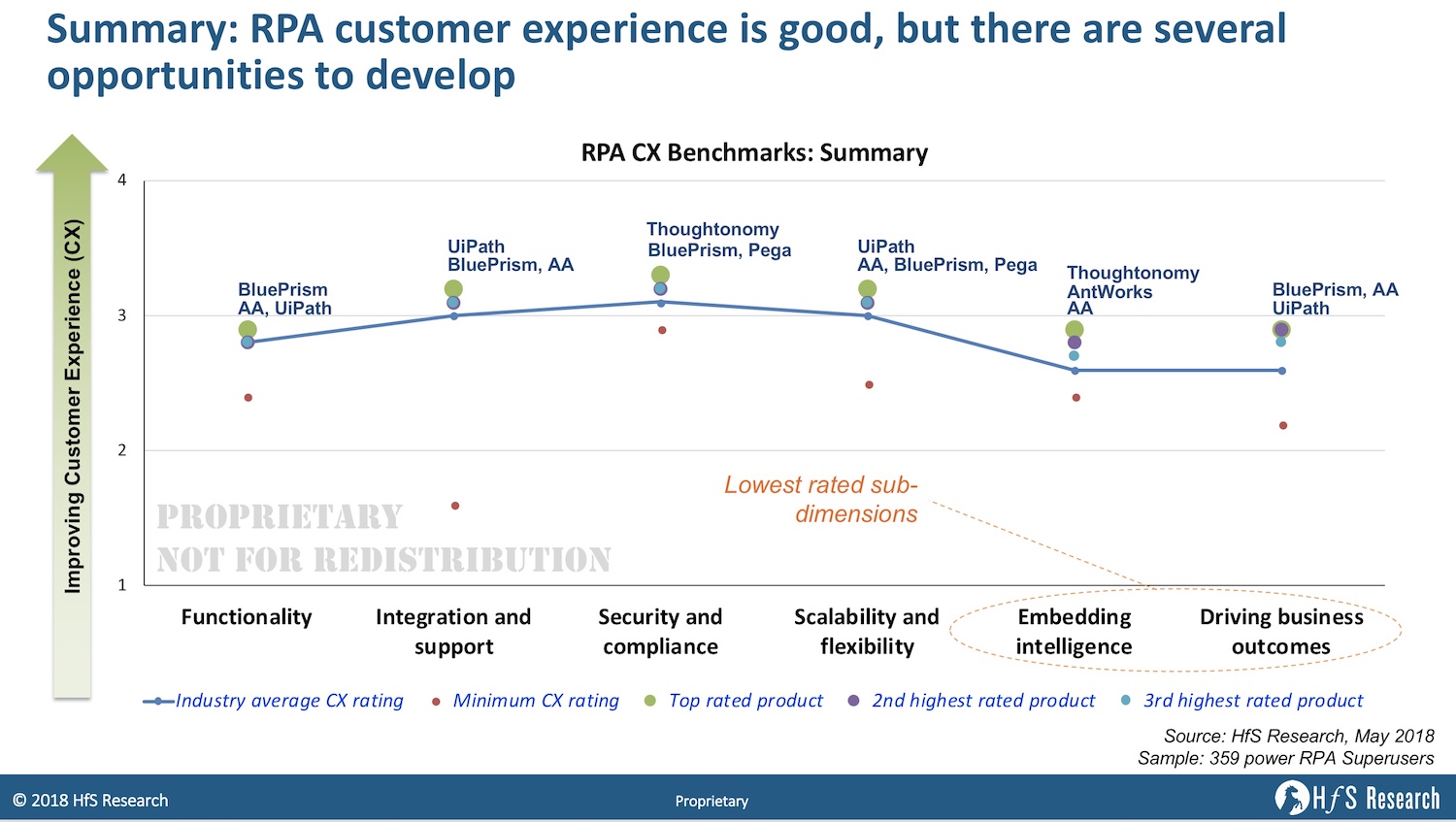

Accenture, IBM, Cognizant, Infosys, Wipro and TCS lead the first Digital OneOffice Blueprint

HfS releases the first official blueprint rating of leading service providers offering multi-functional OneOffice capabilities.

And time for a real Infosys Saliloquy…

Phil Fersht interviews new Infosys CEO Salil Parekh.

Finally, the industry has credible RPA product benchmarks from 359 superusers

HfS Research releases the first credible RPA customer experience benchmarking research, covering the 10 leading products across 359 stakeholders.