The revelation that Deloitte submitted a government report filled with AI-generated fake references and fabricated court quotes is not just embarrassing – it is a $290,000 lesson in what happens when professional judgment is replaced by blind trust in AI.

Australia’s Department of Employment and Workplace Relations said Deloitte will return part of the AU$440,000 fee after errors including a fabricated court quote and non-existent references were uncovered by academic Chris Rudge. A corrected version disclosed use of Azure OpenAI GPT-4o after the scandal broke. AI without verification is not innovation. It is professional malpractice waiting to happen.

GPT-4o did not malfunction. Deloitte’s process did.

Deloitte was hired to review a welfare compliance framework and IT system. The report went live, and a single diligent reader exposed fake sources and a bogus court quote. Deloitte then refunded the final payment and disclosed its generative AI use after the fact.

The model did not fail. It produced fluent, plausible text exactly as designed. What failed was process and accountability. Someone generated content, skipped verification, and submitted it to a government client whose decisions affect millions of citizens and billions in welfare payments. The stakes were too high for shortcuts, yet shortcuts were taken.

Enterprise leaders already know the risks, but they’re buying the services anyway

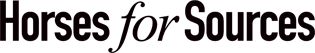

The irony is almost painful. When HFS Research surveyed 505 enterprise leaders across Global 2000 firms in 2024, 32% identified “risk of inaccurate or unreliable outputs, including potential for AI hallucinations” as one of their top concerns when engaging professional services that use AI in delivery. That’s nearly one in three buyers explicitly worried about the exact problem that just cost Deloitte part of its fee and a share of its reputation.

Yet those same enterprises keep signing deals with firms racing to automate their deliverables without building verification into workflows. The Deloitte scandal isn’t revealing a hidden risk; it’s confirming what enterprise leaders already feared. Even more telling, 44% cited lack of transparency in AI-driven decisions as a top concern, and 28% worried about limited accountability for AI-related errors. The market knows the problem exists. The difference now is that Deloitte’s partial refund on an AU$440,000 (roughly US$290,000) contract puts a price tag on ignoring it. When nearly half of your potential clients are already worried about whether you’re being transparent about AI use, hiding GPT-4o in your methodology until after you get caught isn’t just bad practice; it’s commercial suicide.

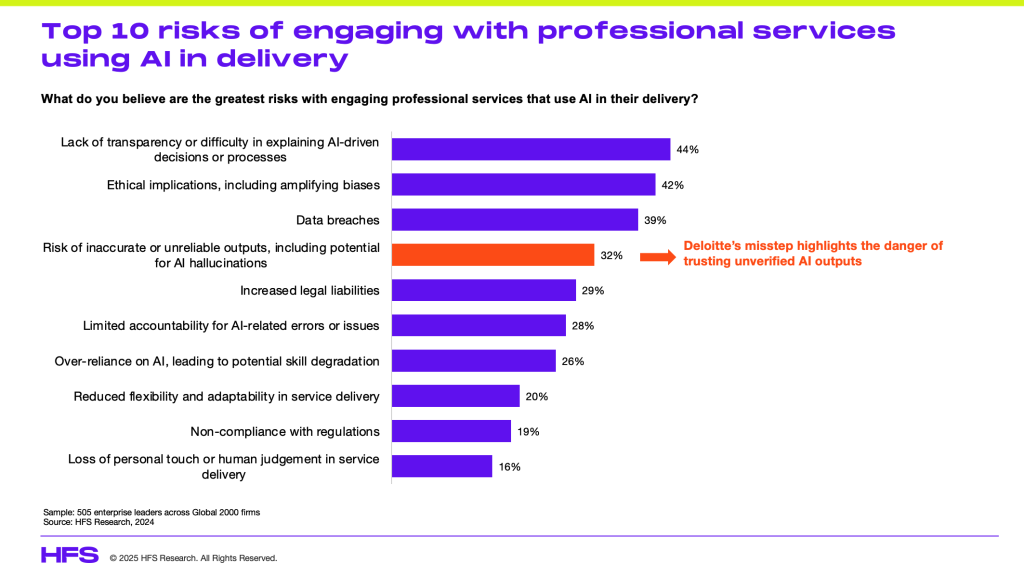

Buyers ranked “ability to balance AI with human expertise” as their fifth most important selection criterion

When HFS Research asked 1,002 enterprise leaders across Global 2000 firms what matters most when selecting an AI-powered consulting firm in 2025, the results expose exactly where Deloitte failed. “Ability to balance AI with human expertise” ranked fifth out of ten criteria, sitting between proprietary IP differentiation and track record of delivering outcomes.

This isn’t a nice-to-have buried at the bottom of the list. It’s a top-five dealbreaker. Yet Deloitte’s approach to the Australian welfare report suggests they treated AI as a replacement for human judgment rather than an amplifier of it. The ranking also reveals something critical about buyer expectations: deep industry expertise still matters most, but the ability to use AI responsibly is now more important than customization, change management capabilities, or vendor ecosystem collaboration. Enterprise leaders aren’t rejecting AI in professional services. They’re demanding that firms prove they can deploy it without sacrificing the human insight they’re paying premium rates to receive. Deloitte’s scandal shows what happens when a firm optimizes for speed and margin while ignoring the one thing clients ranked in their top five priorities.

Your vendors are using AI right now whether you know it or not

If you buy consulting, strategy reports, audits, or any expertise-driven service, assume AI is already in your supply chain. The question is not if vendors are using it, but how responsibly they are doing so.

Make AI disclosure non-negotiable in every contract starting today. Every agreement must spell out which tools are used, for what purposes, and how verification occurs. “We use GPT-4o for initial drafts, followed by human fact-checking” is accountability. “AI-assisted workflows” is a loophole.

Verify before you act on any deliverable that matters. High-stakes work requires qualified human review of every claim and citation. Do not assume plausibility equals truth. Deloitte was caught by one diligent academic. How many unchecked reports are sitting in your systems right now?

Rewrite acceptance criteria because your current standards assume human work. Add explicit checks for fact accuracy, citation integrity, and full AI disclosure to every statement of work before you sign it.

Create escalation protocols before the next crisis breaks. When a fabricated quote surfaces, who investigates? Who notifies the client? How do you remediate within hours, not weeks? Deloitte’s response was reactive PR. You need prevention built into operations.
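The acceptance checks described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed standard: the `Deliverable` and `Citation` structures, their field names, and the failure messages are all assumptions made for the example, and a real statement of work would define its own criteria.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Citation:
    reference: str
    # Name of the human who confirmed the source exists and says what is claimed.
    verified_by: Optional[str] = None


@dataclass
class Deliverable:
    title: str
    # None means the vendor made no AI disclosure at all (hypothetical convention).
    ai_tools_disclosed: Optional[List[str]]
    # Named human reviewer accountable for the content, if any.
    reviewer: Optional[str]
    citations: List[Citation] = field(default_factory=list)


def acceptance_failures(d: Deliverable) -> List[str]:
    """Return the reasons a deliverable fails acceptance; an empty list means accept."""
    failures = []
    if d.ai_tools_disclosed is None:
        failures.append("no AI disclosure statement")
    if d.reviewer is None:
        failures.append("no named human reviewer")
    for c in d.citations:
        if c.verified_by is None:
            failures.append(f"unverified citation: {c.reference}")
    return failures


# Usage with placeholder data (the citation names are invented for illustration).
report = Deliverable(
    title="Compliance framework review",
    ai_tools_disclosed=None,
    reviewer=None,
    citations=[
        Citation("Placeholder v. Example"),
        Citation("Sample 2020", verified_by="A. Reviewer"),
    ],
)
for reason in acceptance_failures(report):
    print("REJECT:", reason)
```

The point of the sketch is that acceptance becomes a list of explicit, checkable conditions rather than a judgment that the document reads plausibly.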

The race to automate is hurting service provider credibility

One unverified deliverable cost Deloitte both money and trust. The economic temptation is obvious: use AI to draft faster, bill the same, and pocket the margin. But that margin gain is being bought with a credibility deficit that compounds with every careless report.

This is the dark side of the Services-as-Software era. AI can enable services to behave like scalable platforms, but that only works when the underlying workflows are validated, explainable, and consistently coded for quality. Without these controls, Services-as-Software collapses into Services-as-Spin.

Make verification mandatory for every single AI-assisted output. Every piece of content must undergo human expert review before it leaves your building. Treat verification as a professional obligation, not an optional cost.

Default to transparency because clients will find out eventually. Discovery happens through audits, detection tools, or leaks. Early disclosure builds trust. Concealment destroys it permanently.

Separate creation from review immediately. The person prompting AI cannot be the same one validating its results. Fresh eyes catch errors invisible to the drafter who anchored on what they expected.

Price for integrity, not just AI-enabled margin expansion. If AI improves efficiency, reinvest some savings in stronger validation. Competing on AI-driven speed while starving quality control is reputational suicide.

Most AI transformation programs fail because they optimize for speed over verification

This problem is now systemic across sectors. New York lawyers were sanctioned in Mata v. Avianca after filing briefs with non-existent cases generated by ChatGPT. UK High Court judges have warned lawyers that citing fake AI cases can trigger contempt referrals. Air Canada was held liable after its website chatbot gave a passenger false policy guidance. Media outlets including CNET and Sports Illustrated faced backlash and corrections for AI-generated content riddled with factual errors and fake bylines. Academic publishers have retracted thousands of papers amid papermill and AI-fabrication concerns, with Wiley confirming over 11,000 retractions tied to Hindawi and Springer Nature retracting a machine-learning book after fake citations were exposed.

LLMs can be transformative when they amplify human expertise. Deloitte’s failure was not using AI. It was abdicating accountability by treating AI as a substitute for analysis rather than a partner in it.

This is exactly where Vibe Coding matters. Enterprises that succeed with AI are already teaching their people to code the “vibe” of quality into every workflow. That means aligning how data is validated, how context is shared, and how collaboration flows across the OneOffice. You do not scale trust with technology. You scale it through consistent cultural coding of how technology is used.

The fastest results come from fixing one broken process at a time

Do not try to govern AI everywhere at once. Start where the blast radius is biggest.

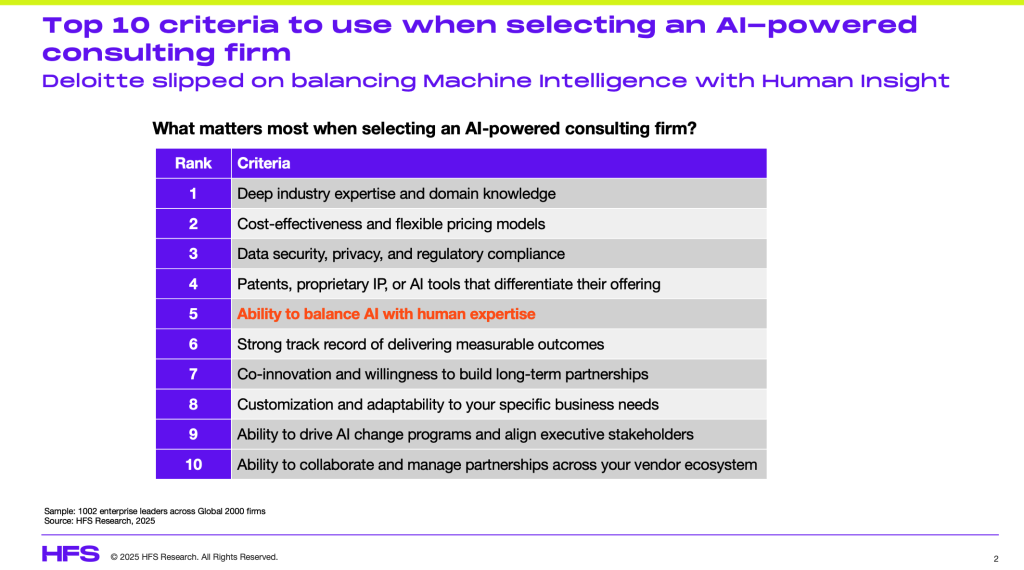

As our recent research across the Global 2000 reveals, the issue with AI transformation isn’t the tech, but the archaic processes that fail to produce the data needed for better decisions. It’s also the failure of leadership to train their people to rethink processes and be aware of the real business problems they are trying to solve. While so many stakeholders obsess over technical debt, the real change mandate is to address process, data, and people debt to exploit these wonderful technologies.

For enterprises: Pick one high-stakes category such as government reports, regulatory filings, audit outputs, or financial models. Build airtight disclosure and verification there first, then scale the approach.

For service providers: Target the practices that use AI most heavily. Make documented verification a mandatory part of client delivery. Track error types and use them to improve prompts, retrieval methods, and quality checklists continuously.

The leaders separating progress from scandal are those who embed quality control at the start, not those scrambling to bolt it on after public failure.
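Tracking error types, as suggested for service providers above, can start as a simple tally. The sketch below is one possible shape for it, under stated assumptions: the error categories, the `(deliverable_id, error_type)` log format, and the function names are hypothetical, and a real practice would define its own taxonomy.

```python
from collections import Counter

# Hypothetical error taxonomy, invented for this example.
ERROR_TYPES = {
    "fabricated_citation",
    "misquoted_source",
    "wrong_figure",
    "unsupported_claim",
}


def tally_errors(review_log):
    """Count errors by type from (deliverable_id, error_type) pairs logged in review."""
    counts = Counter()
    for deliverable_id, error_type in review_log:
        if error_type not in ERROR_TYPES:
            raise ValueError(f"unknown error type: {error_type!r}")
        counts[error_type] += 1
    return counts


def top_priorities(counts, n=2):
    """Return the n most frequent error types, most frequent first."""
    return [error_type for error_type, _ in counts.most_common(n)]


# Usage with placeholder review findings.
log = [
    ("r1", "fabricated_citation"),
    ("r1", "fabricated_citation"),
    ("r2", "misquoted_source"),
    ("r2", "fabricated_citation"),
    ("r3", "misquoted_source"),
    ("r3", "wrong_figure"),
]
print(top_priorities(tally_errors(log)))
```

Whatever the most frequent categories turn out to be, they indicate which prompts, retrieval steps, or checklist items to fix first.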

Regulatory crackdowns are coming and 60% of firms have no AI governance plan

Courts are already adjusting. US judges have begun issuing standing orders that require lawyers to certify whether filings used generative AI and to verify any AI-drafted text. The UK High Court has warned that submitting fictitious AI-generated case law risks contempt or referral to regulators. At the policy level, NIST has published its Generative AI Profile as companion guidance to the AI Risk Management Framework with concrete control actions organizations can adopt now.

Governments burned by AI blunders will introduce binding standards. Professional associations will issue mandatory guidelines. Clients will add AI clauses to every contract with real penalties. The firms that move now, investing in transparency, training, and verification, will win trust and market share. The rest will be litigating their way through the next cycle of embarrassment.

We are in a period where AI capability has outpaced corporate discipline. Old QA checklists miss AI-specific failure modes like hallucinations and fake citations. Old pricing models ignore the real cost of verification overhead. Old disclosure norms hide behind marketing language that protects no one.

Bottom line: AI without verification is outsourcing judgment to a system that confidently invents facts.

Deloitte’s scandal is not an outlier. It is the first major warning shot of a much larger credibility crisis coming for every industry. The shift to Services-as-Software and Vibe-Coded enterprises is about replacing legacy human-only workflows with intelligent, accountable, and transparent ones that combine machine efficiency with human integrity. Build this discipline into your operating model now or explain the next scandal later when your name hits the headlines.
