Robotaxis are driving around San Francisco – and no one knows who is liable when they kill someone

AI is integrating itself into your everyday life more than you know. Your robot vacuum maps your home and Eufy knows you left your dog’s water bowl out last night. Farmers use AI to optimize planting schedules for your Thanksgiving vegetables. The technology has proven itself a trusted companion in mundane tasks, but robotaxis represent something fundamentally different: this is the first time AI demands we surrender control over life-and-death decisions at scale.

Big tech leaders are betting you’ll jump into AI-fueled robotaxis, which represent one of the first genuine examples of AI requiring behavioral change at a societal level. However, the technology isn’t yet ready to scale, consumers are hesitant to trust it, and we haven’t addressed the deeper question: who’s accountable when the algorithm gets it wrong?

Waymo has driven 20 million miles – and still can’t legally drop you at your front door

Self-driving taxis aren’t science fiction. Uber has partnered with Waymo to make them accessible to its client base. In China, companies like Baidu are clocking millions of autonomous miles. You might not see them, but robotaxis are already on the roads, and they’re exposing the AI Velocity Gap in real time: the technology is moving faster than society’s ability to adapt, regulate, or trust it.

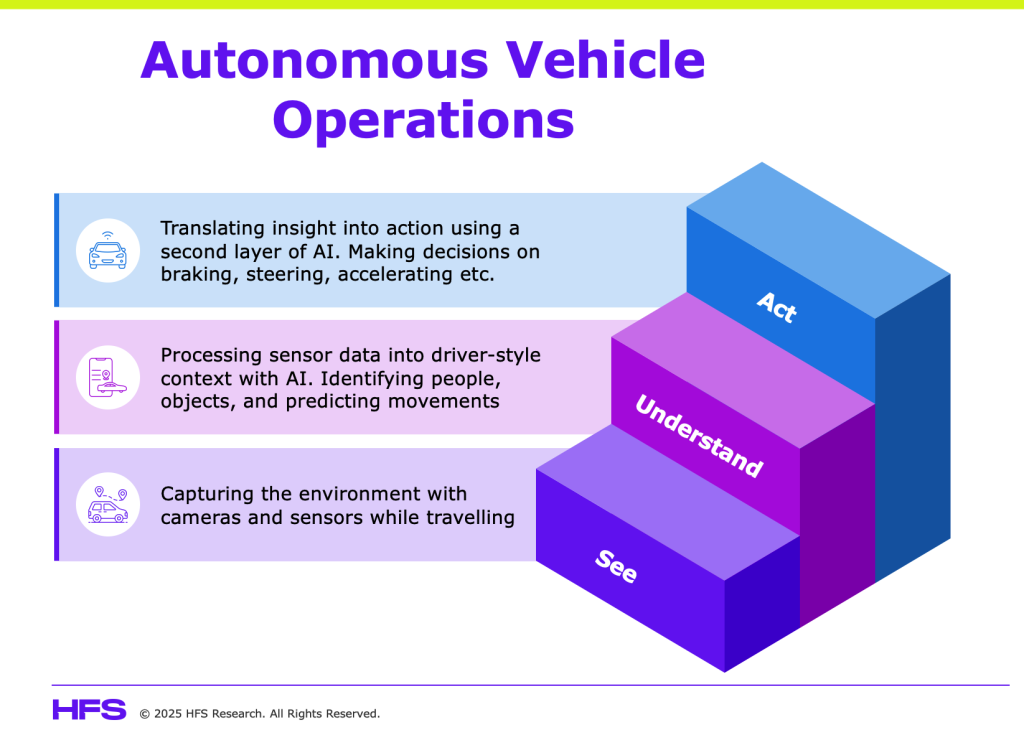

Autonomous driving sounds complex, but it’s built on three simple layers: the ability to see (sensors and cameras), understand (AI models processing real-time data), and act (algorithms making split-second decisions). These three layers combine to create the digital driver of every robotaxi you see today. Each layer is another element passengers must trust to function correctly when they jump in for a ride. And that’s where the model breaks down.
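To make the three layers concrete, here is a toy Python sketch of a see, understand, act loop. It is an illustration only and does not reflect how Waymo, Baidu, or any other vendor builds its stack. Every name in it (`Detection`, `see`, `understand`, `act`, `drive_step`) and both thresholds (`min_confidence`, `braking_distance_m`) are assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str          # what the model thinks it saw, e.g. "pedestrian"
    distance_m: float   # distance ahead of the vehicle, in metres
    confidence: float   # model confidence between 0.0 and 1.0


def see(raw_frames):
    """Layer 1 (hypothetical): keep only the sensor readings that arrived."""
    return [frame for frame in raw_frames if frame is not None]


def understand(frames, min_confidence=0.5):
    """Layer 2 (hypothetical): turn readings into detections and
    discard anything the model is not confident about."""
    detections = [
        Detection(f["label"], f["distance_m"], f["confidence"]) for f in frames
    ]
    return [d for d in detections if d.confidence >= min_confidence]


def act(detections, braking_distance_m=20.0):
    """Layer 3 (hypothetical): brake if any detected object is too close."""
    if any(d.distance_m <= braking_distance_m for d in detections):
        return "brake"
    return "continue"


def drive_step(raw_frames):
    """Run one pass through all three layers."""
    return act(understand(see(raw_frames)))


# A confident detection close to the car triggers braking.
print(drive_step([{"label": "pedestrian", "distance_m": 10.0, "confidence": 0.9}]))

# A low-confidence detection is dropped in layer 2, so layer 3 never sees it.
print(drive_step([{"label": "cat", "distance_m": 5.0, "confidence": 0.3}]))
```

The second call shows why each layer is a separate point of trust: the "act" layer can only respond to what the "understand" layer passes along, so a miss upstream becomes a wrong decision downstream.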

Robotaxis have already killed a cat, passed school buses illegally, and hit pedestrians – and no one knows who’s liable

We know from our work with enterprises that AI struggles when reliability and edge cases collide. It needs clean, consistent data to make accurate decisions. Waymo has logged millions of controlled driving hours. Companies like Volvo leverage digital twins to test dangerous scenarios. It’s still not enough. Robotaxis are not yet equipped with the data to handle every life-changing decision, and the result is high-stakes errors and an incomplete experience.

Robotaxis are geofenced to specific streets, leaving them unable to deliver the door-to-door experience people expect from traditional services. We’ve already seen Waymo vehicles illegally pass school buses, a neighborhood cat killed when sensors failed to detect it, and Baidu vehicles colliding with pedestrians. These instances are rare, but the consequences are catastrophic. And they expose Leadership Debt across the industry: who owns the decision when the algorithm fails? The manufacturer? The city that approved the route? The passenger who chose to get in?

And that is before we discuss bad actors. Prime Video’s Upload centers on a character killed when his robotaxi is hacked. The plot may be overdramatized, but it highlights just how disastrous weaponized autonomy could be. If your navigation system can be compromised, so can your ride.

China is clocking millions of autonomous miles while the US debates every fender bender – neither approach solves trust

Although the US and China are two of the most technologically advanced countries, their robotaxi rollouts look completely different. The US is adopting a regulatory-led, phased approach in which every incident triggers political pressure to tighten restrictions, which slows progress. China has taken a much lighter approach, allowing Baidu to clock millions of autonomous miles, which builds a robust dataset for exception handling.

China wins the scale battle; the US wins the trust battle. The reality is that both are crucial if robotaxis are going to become mainstream. Trust without scale is pointless. Scale without trust is dangerous. And neither country has solved the velocity problem: how do you move fast enough to capture the learning while moving slow enough to earn public confidence?

Trump’s December 2025 AI executive order just traded state-level chaos for a federal accountability vacuum

President Trump’s December 2025 AI executive order signals a significant shift toward lighter federal oversight and preemption of state regulations. The order directs federal agencies to challenge state AI laws viewed as burdensome and aims to create uniform federal policy rather than a patchwork of local rules. For robotaxi developers, this could reduce regulatory fragmentation that currently slows deployment across jurisdictions, potentially accelerating testing and commercial rollout.

However, here’s the problem: the order doesn’t establish comprehensive federal safety standards for high-risk AI systems, such as autonomous vehicles. Critical questions around oversight, safety thresholds, and liability remain unresolved. Robotaxi firms may gain regulatory predictability at the national level, but they’ll face ongoing legal and political pushback from states seeking to enforce their own safety protections. California won’t abandon strict testing requirements just because the White House says so. States that experience fatal incidents won’t wait for federal standards before imposing bans.

The result is a mixed landscape that yields no solution. Robotaxi firms get neither clear federal guardrails nor freedom from state intervention. They get jurisdictional conflict without accountability. China operates under unified national AI governance with clear safety standards and rapid iteration. Trump’s order gives American robotaxi firms regulatory uncertainty masquerading as innovation policy, which complicates real-world scaling while claiming to accelerate it.

Society trusts humans who make fatal mistakes daily but won’t trust AI that could be statistically safer – the paradox is killing adoption

The reality is that people don’t trust AI with their lives, which is why we haven’t seen widespread acceptance of robotaxis. The stakes are much higher than letting technology choose your next movie or draft an email. One misstep in a robotaxi can be catastrophic. But the same is true for human drivers, which makes robotaxis a case study in societal change management, not just engineering.

We trust humans to drive because we understand their mistakes – fatigue, distraction, bad judgment. We also believe we can intervene. Grab the wheel. Yell “stop.” The same cannot be said for robotaxis. They lack the “oops I didn’t see that cyclist” moment you might have in a traditional taxi. There’s no negotiation, no eye contact, no human accountability in the moment. It’s blind trust or nothing.

This creates a paradox: countless research papers tell us robotaxis will eventually be safer than human drivers. They don’t drink, get tired, or check their phones. But they need to drive the miles – and make the mistakes – to get there. Society must absorb the cost of that learning curve, and we haven’t agreed to that contract. Waymo, Baidu, and other robotaxi firms aren’t just building technology. They’re asking society to rewrite the rules of accountability, liability, and trust. And they’re doing it without admitting that’s what they’re asking for.

Millions of driving jobs will vanish when robotaxis eventually scale – and tech firms are treating displacement as someone else’s problem

Beyond safety, there’s an economic and social disruption no one is discussing openly. Ride-hailing and taxi drivers represent millions of jobs globally. Truck drivers, delivery drivers, and logistics workers are next. If robotaxis scale, entire labor markets collapse. That’s not speculation – it’s math. The industry’s response so far has been to treat displacement as an externality rather than a design problem.

This isn’t just about technology replacing jobs. It’s about Leadership Debt at a societal level: the failure to plan for what happens when automation moves faster than workforce transition, social safety nets, or political consensus. We’ve seen this movie before with manufacturing automation. The difference is that robotaxis will hit urban labor markets where political consequences arrive faster and hit harder.

Bottom line: Stop pretending robotaxis are a technology problem waiting for better algorithms.

They’re a trust problem, an accountability crisis, and a social contract no one agreed to. The AI Velocity Gap will become permanent if tech firms keep moving faster than society’s ability to absorb the consequences. China answered the governance question with unified national rules. The US created regulatory chaos. And until someone admits robotaxis require societal infrastructure – not just better sensors – autonomous vehicles will never leave their geofenced zones.

Posted in: AGI, Artificial Intelligence, Automation, Change Management, GenAI